Somewhere in your company, right now, someone might be using an AI tool without your knowledge. Maybe it’s a marketer drafting headlines with Gemini, an engineer summarizing reports with Claude, or a sales rep testing an AI spreadsheet plugin. None of them asked for approval – they just wanted to work faster.

This is the scenario playing out in offices everywhere. A marketing specialist, under pressure to deliver a client proposal, copies important client data into a free chatbot to get a draft fast. The result: quicker turnaround and an impressed client. But no one in IT or management knows how the tool was selected, whether it’s secure or what happens to that client’s sensitive data.

Welcome to the world of Shadow AI: the employee-driven, unapproved use of Artificial Intelligence tools in the workplace. This phenomenon is growing fast, and it matters to everyone. Especially for professionals working in B2B (whether you’re an engineer designing control units, a marketer building campaigns, or an entrepreneur launching a startup), this trend is fundamentally reshaping how work gets done.

In this article, we’ll pull back the curtain and take a closer look at this growing trend. We’re going to explore what the data actually tells us about how employees are using AI on their own, and why they are adopting these tools without waiting for official approval. Crucially, we’ll uncover the hidden risks and blind spots this creates for many organizations. To make this real, we’ll walk through concrete scenarios showing exactly how this plays out in practice, and then give you practical steps on what companies (especially SMEs) can do to respond smartly and turn a potential risk into a true advantage. Finally, we’ll touch on what the future may bring in this space.

Let’s dive in. By the end of this article, you’ll be equipped not just to understand the rise of Shadow AI, but to act on it and ensure your business stays secure and productive!

What the Data Shows: Scale and Key Trends

The numbers don’t lie: Shadow AI is not a fringe phenomenon. It’s already the norm!

A recent, large-scale survey of knowledge workers across the US, UK, and Germany reveals the astonishing scale: a massive 75% of employees are already using AI in their jobs. Here’s the kicker: roughly half of all employees surveyed are relying on tools not issued or approved by the company. (Source: SoftwareAG)

The Employee Drive: Speed and Ease

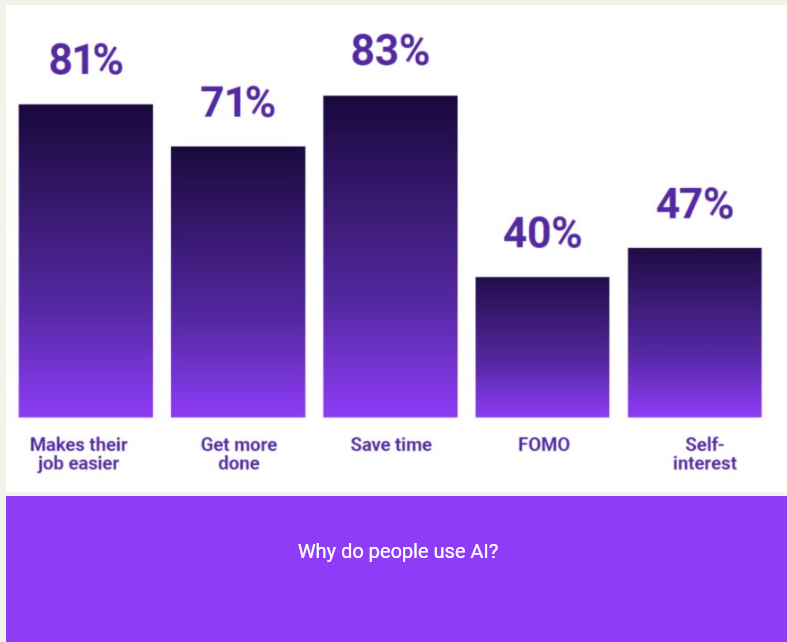

The big question here is: Why are employees taking matters into their own hands? The reasons are simple: they want to work better, faster, and easier. The main motivations cited by users are saving time (83%), making the job easier (81%), and directly improving their productivity (71%). For the individual employee, this is a clear win. (Source: SoftwareAG)

The Organizational Blind Spot

However, this widespread adoption creates a massive blind spot for organizations. The average company, according to a recent security study, is dealing with as many as 254 distinct AI applications in a single quarter, many of which are completely unsanctioned. This lack of oversight has tangible consequences. (Source: Harmonic Security)

In fact, nearly 80% of IT leaders report having suffered negative outcomes from employee use of generative AI. The risks are real and immediate:

- 46% cited false or inaccurate results (a major risk for client-facing work).

- 44% reported data leaks. (Source: TechRepublic)

And that’s not all! These risks are amplified by employee habits. One survey found that 68% of enterprise AI users accessed publicly available tools via personal accounts, and 57% admitted to entering sensitive or confidential information into those tools. (Source: TELUSDigital)

In short: Employee-driven AI usage at work is widespread and growing rapidly, happening largely outside formal oversight. While it provides an undeniable productivity boost, it also exposes organizations to significant, measurable risks.

Why Employees Are Adopting AI on Their Own

It’s easy to see why staff are turning to AI tools independently. They aren’t trying to be malicious; they’re just trying to be effective. This self-driven adoption is powered by four human-centric drivers that anyone can understand:

The Path of Least Resistance: Ease and Speed

The first major driver is simple: ease of access! Many of the most powerful AI tools available today (like generative chatbots, summarization tools, or coding assistants) are free or low-cost and can be accessed with a simple browser login. Employees don’t need to go through lengthy, frustrating internal procurement or IT approval processes. When the friction is low, adoption happens fast.

Frustration with Official Tools

The flip side of easy external access is often frustration with internal tools. Employees frequently find the officially provided platforms slow, less intuitive, or simply not aligned with their immediate, day-to-day needs. If the company tool requires lots of setup, mandatory training, or feels too “locked down,” users will naturally prefer a simpler external option. Who doesn’t want to take the path of least resistance? This isn’t just speculation: a relevant survey found that 33% of those using Shadow AI did so precisely because their employer did not offer the tools they needed. (Source: SoftwareAG)

The Pressure to Perform

In modern B2B roles, productivity pressure is intense. Whether you’re an engineer designing complex parts, a marketer under a campaign deadline, or a developer racing through a sprint, making things faster matters. When an employee discovers an AI tool that can summarize massive amounts of data, generate a first draft in minutes, or instantly speed up code writing, they adopt it for a clear competitive edge. This benefit isn’t just about the current workload, either: nearly half of users (47%) reported they believe using these AI tools will help them get promoted faster. The prospect of a promotion and increased pay is undeniably tempting. (Source: SoftwareAG)

Autonomy and Innovation

Finally, this trend taps into a deeper desire for autonomy. Many professionals simply want the independence to choose the tools that work best for them. One survey found 53% of knowledge workers preferred having their own choice in selecting their professional tools. In fast-moving companies or startups, this self-starter approach often feels natural. It’s part of a healthy, “let’s just get it done” culture. (Source: SoftwareAG)

Together, these four factors (ease of use, tool dissatisfaction, productivity demands, and a desire for autonomy) powerfully explain why employee-led AI adoption is accelerating, even when it is unofficial.

The Hidden Risks and Organizational Blind Spots

While employee-driven AI usage provides an undeniable productivity boost, it also introduces significant, measurable risks, especially when left unmanaged. When employees go rogue with tools, the organization develops critical blind spots:

The Visibility and Governance Gap

The most basic problem is simple: organizations don’t know what they don’t know. Few security professionals (a mere 28%, according to one survey) believe their organizations have clear policies or training around the use of unsanctioned Generative AI. This lack of clear guidance leads to a severe visibility gap. If a company can’t see which tools are being used, what data is being fed into them, or how the results are being incorporated into workflows, it becomes impossible to audit or govern that activity.

Data Security and Compliance Catastrophe

This visibility gap directly translates into major data security and compliance risks. Entering company, client, or sensitive data into publicly available AI tools can instantly violate internal privacy, intellectual property, or regulatory policies.

The evidence is alarming:

- A majority of employees (57%) have admitted to entering sensitive information into Generative AI assistants.

- The consequences are already being felt, with 44% of IT leaders citing the leakage of sensitive data as a direct outcome of employee-driven AI use.

This behavior leaves sensitive client data sitting outside the company’s secure walls, where it can no longer be controlled, and sets the stage for a potential compliance catastrophe.

Accuracy and Reliability Issues

Unapproved AI tools may deliver outputs that are inaccurate, misleading, or outright false, a phenomenon known as “hallucination”. Without proper, official checks and balances, these errors can be imported into mission-critical work. This risk is already widespread: 46% of IT leaders cited false or incorrect outputs from employee AI use, which can lead to costly decision errors, rework, wasted time, and significant trust and reputational damage. (Source: TechRepublic)

Cultural Fallout and Fragmentation

When employees feel they must operate “underground” with their tools, it slowly chips away at trust, collaboration, and alignment with overall company strategy. If one department uses a shadow tool and shares the output with another team that is unaware of the process, workflows can quickly become fragmented and inconsistent. As industry analysis suggests, this resulting fragmentation “hurts efficiency, compliance and consistency of key services.” (Source: SoftwareAG)

The Productivity Paradox

Ironically, the pursuit of productivity can become a paradox. Without proper oversight or training, some employees may end up spending more time learning how to effectively prompt and manage an unfamiliar AI tool than they save doing the actual task. In these cases, the promised productivity gain doesn’t materialize, yet the organizational risk from data exposure still accumulates.

Concrete Scenarios: Where Shadow AI Plays Out

To truly understand Shadow AI, it helps to see it in action. These two realistic scenarios illustrate the core dilemma: high productivity gains balanced by hidden, unmanaged risk.

Scenario A: The SME Marketing Firm

Imagine a small, 25-person digital-marketing agency that hasn’t yet implemented a company-wide generative AI tool. A senior marketer, facing pressure to produce a lengthy blog series, turns to a free, public chatbot. They copy in confidential client data (brand values, audience insights) and ask the AI to generate three drafts. The polished work is delivered quickly and the client is satisfied. A win for speed and creativity, right?

The Reality Check: While the marketer gained crucial speed, that client data was entered into a public AI tool entirely outside the agency’s governance. Management has zero visibility into which tool was used, how the client data is stored, or what happens to the inputs and outputs. If that third-party tool retains data or uses it for model training, the agency’s confidential client information could be exposed, which is something to avoid at all costs. Furthermore, the damage is not limited to the client’s information: it also creates organizational inconsistency, because other team members who hear about the tool may try to use it without knowing the process or the risks.

Scenario B: The Manufacturing Design Team

Now consider an engineering firm where a design team is working on a new control-unit module. One engineer decides to use an unapproved AI-powered code assistant to quickly handle the boilerplate firmware code. They upload parts of the internal spec document to the tool to generate the helper functions. Time is saved, and the initial result looks good.

The Hidden Cost: Later, during the quality assurance (QA) phase, reviewers discover that the generated code contains subtle but critical security vulnerabilities. Why? Because the AI model was trained on flawed, public code patterns. Fixing this issue requires expensive, time-consuming rework, and the firm realizes the unapproved tool introduced risk far outweighing the initial productivity gain. The engineering department must now decide whether to rebuild controls, ban the tool entirely, or try to sanction it, and every one of these options is costly and time-consuming.

Key Takeaways from the Examples

These scenarios highlight the dual nature of employee-driven AI. In both cases, the employee was motivated by a genuine desire for speed and flexibility, leading to immediate productivity gains. However, the lack of organizational oversight meant that the security vulnerabilities and data risks surfaced only after the fact. Organizations are left with the burden of remediation and of rebuilding governance, which shows that the promise of Shadow AI comes with a hidden cost and risk when it is left unmanaged.

The Roadmap: How B2B and SME Firms Should Respond to Shadow AI

Given the reality of widespread Shadow AI and the associated risks, organizations (especially B2B firms and SMEs) cannot afford a purely restrictive approach. The goal is to shift from a state of fear and prohibition to one of governed enablement. Here are the most important steps you can take:

1. Recognize and Accept the Reality

The first step is simple: recognize that it’s happening. Accept the fact that roughly 50% of knowledge workers are already using non-company-issued tools. Instead of viewing all unsanctioned usage as rule-breaking, treat it as a powerful signal: your employees are actively seeking solutions to boost their productivity. This gap isn’t just a threat. It’s an opportunity for improvement!

2. Create Clear, Transparent Policies

Restrictive policies only drive AI usage further underground. To gain visibility, you must be transparent, not disciplinary:

- Draft clear usage guidelines that define which types of tools are approved, what category of company or client data may (or may not) be entered, and how AI outputs can be shared.

- The aim is to demystify the rules, not just ban tools. With only about 36% of security professionals reporting clear policies around Shadow AI, establishing yours is a major competitive advantage in managing risk.

- Ensure every employee understands how these policies apply to their everyday tasks.
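
One way to make such guidelines concrete is to express them as a small, machine-readable policy. The sketch below is purely illustrative and rests on assumptions: the tool names, the three data categories, and the `is_allowed` helper are hypothetical placeholders, not a standard and not a recommendation for any specific product.

```python
from dataclasses import dataclass

# Hypothetical data categories, ordered from least to most sensitive.
DATA_LEVELS = {"public": 0, "internal": 1, "confidential": 2}


@dataclass(frozen=True)
class ToolPolicy:
    name: str
    approved: bool
    max_data_level: str  # most sensitive category that may be entered


# Illustrative entries only; replace them with the tools your teams actually use.
POLICIES = {
    "approved-chat-assistant": ToolPolicy("approved-chat-assistant", True, "internal"),
    "free-public-chatbot": ToolPolicy("free-public-chatbot", False, "public"),
}


def is_allowed(tool: str, data_category: str) -> bool:
    """Return True if the tool is approved and the data category is within its limit."""
    policy = POLICIES.get(tool)
    level = DATA_LEVELS.get(data_category)
    if policy is None or not policy.approved or level is None:
        return False  # unknown tools, unapproved tools, and unknown categories are denied
    return level <= DATA_LEVELS[policy.max_data_level]
```

With these sample entries, `is_allowed("approved-chat-assistant", "internal")` is `True`, while the same tool with `"confidential"` data, or any tool missing from the list, is denied. Denying by default keeps the written guideline and the check in agreement when a new tool appears that nobody has reviewed yet.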

3. Provide User-Friendly, Approved Alternatives

Employees go rogue when official tools are inconvenient or insufficient. If your internal platforms are slow or unintuitive, they will always lose to the free, fast option:

- Invest in user-centric platforms that meet the demand for speed and ease. The data shows this is essential: 33% of users take matters into their own hands because their employer lacks the right tools.

- Work directly with key user groups (Marketing, Engineering, Operations) to identify their most-needed functions and then make a list of safe, approved options easily accessible. This is the most effective way to reduce Shadow AI.

4. Train and Empower Employees

Another important step is to frame training as a productivity enabler, not just risk mitigation. Why? Employees will engage when they see a direct benefit:

- Offer short, practical training sessions covering effective prompting, how to critically evaluate AI outputs for accuracy, and how to recognize the risk of data leakage.

- Educate staff on basic security practices, such as running a security scan before using a new AI tool (only 27% of users do so, according to a recent study) and avoiding uploading private data via personal accounts.

5. Monitor, Iterate, and Align

Governance is a continuous loop, not a one-time project:

- Implement auditing tools or dashboards to detect usage patterns: Which departments are interacting with which tools? What type of data is being uploaded (e.g., in one study, about 45% of sensitive AI interactions came from personal email accounts)?

- Designate an “AI champion” or a cross-functional team, even in smaller firms, to watch trends, respond to queries, and act as a connection between users and IT/security.

- Use the feedback to refine your policy and approved tool list constantly.
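
Even a very simple report can provide this kind of visibility. The following is a minimal sketch, assuming usage records are available as department and tool pairs (for example from an employee survey or a proxy log export). The record format, the tool names, and the `summarize_unsanctioned` helper are hypothetical, and the sample data is made up for illustration.

```python
from collections import Counter

# Hypothetical allowlist; in practice this would come from your AI usage policy.
APPROVED_TOOLS = {"approved-chat-assistant"}

# Made-up sample records, e.g. from an employee survey or a proxy log export.
usage_log = [
    {"department": "Marketing", "tool": "free-public-chatbot"},
    {"department": "Marketing", "tool": "approved-chat-assistant"},
    {"department": "Engineering", "tool": "unvetted-code-assistant"},
    {"department": "Marketing", "tool": "free-public-chatbot"},
]


def summarize_unsanctioned(records, approved):
    """Count uses of non-approved tools per (department, tool) pair, most used first."""
    counts = Counter(
        (r["department"], r["tool"]) for r in records if r["tool"] not in approved
    )
    return counts.most_common()


for (department, tool), n in summarize_unsanctioned(usage_log, APPROVED_TOOLS):
    print(f"{department}: {tool} used {n} time(s)")
```

For the sample data, this lists the Marketing team’s use of the public chatbot first (two uses), followed by Engineering’s unvetted code assistant (one use). The most frequent entries are natural candidates for a conversation with the team about an approved alternative.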

6. Formalize What Works: Leverage the Upside

The final step is to leverage employee ingenuity. Stop only policing and start formalizing what works:

- Treat the AI tools employees are already gravitating toward as signals of high-impact use cases. If, for example, the Marketing team is efficiently using a certain AI assistant for draft creation, explore how that tool can be formally sanctioned, integrated, and supported.

- This process turns a risky “rogue” workflow into a documented, repeatable one, effectively reducing variance and risk while protecting the hard-won productivity gain.

- Identify 2-3 high-impact use cases (e.g., report summarization, code boilerplate, email drafting) and create official “AI Workflows” with evaluated tools and best practices.

Key Action Plan: Summary for SMEs/B2B Firms

The shift from prohibition to enablement requires a structured plan. The following table consolidates the key strategic actions into a checklist for managing Shadow AI effectively:

| Action | Why it matters |
| --- | --- |
| Recognize employee-driven AI exists | Accepting reality is the first step to managing it. |
| Draft clear AI use policies | Prevents confusion and builds shared guardrails. |
| Provide approved tools that work well | Reduces the motivation to use unsanctioned tools. |
| Train employees for safe & effective use | Improves output quality and drastically reduces risk. |
| Monitor and refine workflows | Ensures continuous alignment with business goals and risks. |
| Formalize high-value workflows | Turns “shadow” gains into documented, repeatable processes. |

Future Outlook: What to Expect in the Next 2–3 Years

Looking ahead to the period of 2025–2027, the trend of employee-driven AI adoption is set to intensify, bringing several key themes to the fore:

The Blurring Lines and New Skills

The most immediate change is the further embedding of AI into workflows. As generative AI becomes seamlessly integrated into familiar tools, the line between “official” and “personal” resources will blur, giving organizations that enable safe, aligned use a significant productivity edge.

In this environment, technical infrastructure alone won’t be enough. Human skills and governance will be the true differentiators. Success will depend on people’s AI knowledge (skills like expert prompt-crafting and critically evaluating outputs) backed by robust governance structures around data handling and tool evaluation. Consequently, we will see the rise of “AI-first” job roles. Some jobs will explicitly include AI-tool usage as a core skill, moving beyond “using Word/Excel” to managing and curating AI interactions.

New Challenges and Regulatory Pressure

Compounding this shift is the expected emergence of more agentic and personal AI assistants. As these tools become highly personalized and autonomous, employee usage will multiply, creating greater visibility and compliance challenges for IT and security teams.

Simultaneously, the regulatory world is catching up. Regulatory and compliance pressure will intensify as data laws, intellectual property norms, and model-training ethics mature. This means employee use of unsanctioned tools will attract greater scrutiny and potential financial consequences.

The Defining Trade-Off

Ultimately, the future of work will be defined by the productivity payoff versus the risk trade-off. Firms that choose to ignore Shadow AI will risk resource waste, data breaches, and organizational silos. In contrast, those that embrace guided adoption and integrate employee ingenuity will capture compounding gains and be far better positioned for the next phase of digital business.

In short: Shadow AI is a definitive signal of how workplace productivity and culture are evolving. Businesses that proactively align with (rather than fight) this trend will be better positioned for the future.

Conclusion

Employee-driven AI usage at work (often dubbed Shadow AI) is already a major, irreversible workplace phenomenon. For professionals like engineers, marketers, and developers, it offers undeniable value: speed, flexibility, and creativity. Yet for organizations, particularly in B2B and SME contexts, it raises critical risks: significant governance gaps, data exposure, and potential workflow fragmentation.

The key takeaway is that this isn’t simply a matter of banning tools or strictly enforcing rules. It is fundamentally about recognizing the trend, strategically aligning policies and tools with user behaviors, providing safe alternatives, and successfully turning informal, high-value productivity gains into formalized, documented workflows.

A Final Checkpoint

To initiate this essential shift, ask yourself (and your leadership team) these critical questions:

- What AI tools are my employees already using?

- Are they sanctioned? More importantly, are they secure?

- If not, why not, and what user-friendly, approved alternative could I provide?

- How can I effectively enable productivity while managing organizational risk?

By proactively answering these questions, you will not only keep pace with the evolving workplace, but you will also help turn employee initiative into a managed, organized, and compliant competitive advantage.

Use our checklist to manage Shadow AI risks and always stay one step ahead!

Frequently Asked Questions (FAQ)

Q1. What exactly is “Shadow AI”?

Shadow AI refers to the use of AI tools by employees without formal approval or oversight from their organization’s IT or security function. These are typically tools accessed via a free trial, personal accounts, or readily available public platforms.

Q2. Isn’t any AI tool just productivity-boosting? Why worry?

While many tools do deliver productivity gains, unsanctioned ones can cause severe data leaks, create compliance issues, and produce inaccurate outputs that lead to inconsistent workflows.

Q3. My company is small—is this relevant for SMEs too?

Definitely! SMEs often have fewer established controls and more agile, “get-it-done” cultures, which can significantly accelerate the adoption of unsanctioned tools. By providing the right tools, training, and policies early, SMEs can capture the productivity gains while keeping their data and compliance risks manageable.

Q4. How can I start implementing an AI tool governance framework?

Start with a six-step iterative process:

- Audit: Ask employees what tools they currently use.

- Policy: Define clear rules for AI usage and data handling.

- Tool-Selection: Identify a small set of approved AI tools that meet core user needs.

- Training: Provide short modules on safe and effective AI use.

- Monitoring: Set up visibility (even simple dashboards) to track AI tool-usage patterns.

- Iterate: Refine your policy and tool list based on feedback and usage data.

Q5. Will banning unsanctioned AI tools stop Shadow AI?

Unlikely. Studies show that a blanket ban alone is insufficient because employees will simply find and use tools anyway if they offer a significant value proposition. The most effective approach is to enable safe alternatives and align with the productivity demand rather than fighting it.